Automatic speech recognition predicts contemporary earthquake fault displacement

Seismic data and GNSS

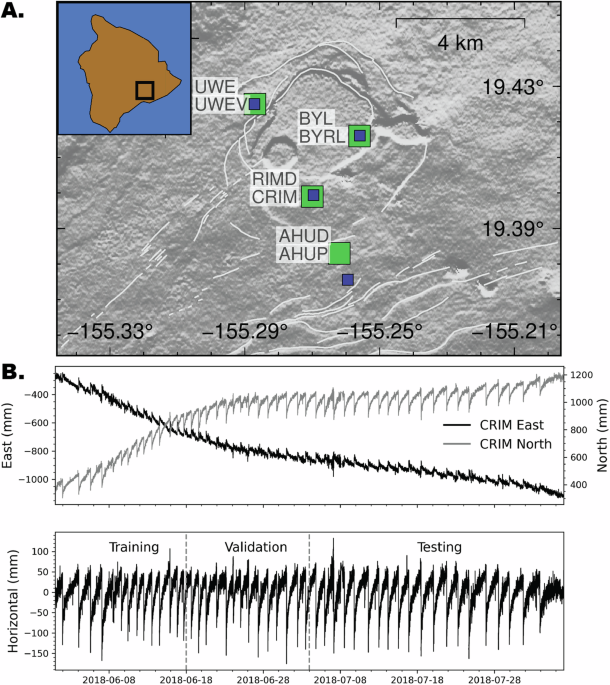

The Hawaiian Volcano Observatory operates a permanent seismic network in which multiple stations are paired with high-rate GNSS receivers (Figure 1). The analysis uses data recorded between 1 June 2018 and 2 August 2018, when approximately 50 earthquakes of ~Mw 5 occurred during progressive caldera collapse. The seismic-GNSS station pairs RIMD-CRIM, BYL-BYRL and UWE-UWEV are located on the edge of the caldera, and AHUD-AHUP is located south of the caldera, with the AHUP GNSS monument approximately 0.5 km from the seismic sensor.

High-rate GNSS solutions are processed relative to the KOSM station with a sampling interval of 5 seconds. The displacement time series of the east and north GNSS components are combined into a horizontal magnitude, \(\sqrt{\mathrm{east}^{2}+\mathrm{north}^{2}}\). Long-term ground motion is estimated by applying a 10-day-wide filter, and this trend is removed to focus only on displacements associated with successive collapse events (1-3 day recurrence interval). GNSS data are downsampled by averaging 6 successive samples to obtain a displacement time series with a 30 s sampling interval. To reduce high-frequency fluctuations, the time series between successive collapses is smoothed using a 5-minute window.
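The GNSS steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, the 10-day filter is assumed to be a centered moving average (the text does not specify the filter type), and the 5-minute smoothing between collapses is omitted because it needs the collapse times.

```python
import numpy as np

def horizontal_displacement(east, north):
    """Combine east and north components into a horizontal magnitude."""
    east = np.asarray(east, dtype=float)
    north = np.asarray(north, dtype=float)
    return np.sqrt(east**2 + north**2)

def moving_average(x, width):
    """Centered moving average with edge padding; output has the same length as x.

    ASSUMPTION: a boxcar filter stands in for the unspecified 10-day filter.
    """
    x = np.asarray(x, dtype=float)
    left = width // 2
    right = width - 1 - left
    padded = np.pad(x, (left, right), mode="edge")
    csum = np.cumsum(np.insert(padded, 0, 0.0))
    return (csum[width:] - csum[:-width]) / width

def block_average(x, factor=6):
    """Downsample by averaging non-overlapping blocks of `factor` samples."""
    x = np.asarray(x, dtype=float)
    n = (len(x) // factor) * factor  # drop an incomplete trailing block
    return x[:n].reshape(-1, factor).mean(axis=1)

def preprocess_gnss(east, north, dt=5.0, trend_days=10, factor=6):
    """Horizontal magnitude -> remove long-term trend -> 5 s to 30 s samples."""
    horizontal = horizontal_displacement(east, north)
    trend_width = int(trend_days * 86400 / dt)
    detrended = horizontal - moving_average(horizontal, trend_width)
    return block_average(detrended, factor)
```

With `dt=5.0` and `factor=6`, each output sample represents 30 s, matching the sampling interval stated above.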

Continuous 3-component broadband seismic records (HH channels at 100 Hz) are of high quality, without long periods of missing data. For each trace, the instrument response is deconvolved to velocity, and the trace is demeaned, filtered between 0.1 and 50 Hz, and merged into a continuous time series. The number of missing data points is negligible, and interpolated values are inserted to preserve the sample rate. The data traces are divided into non-overlapping 30-second windows (3000 points) that are aligned in time with the GNSS time series, so that each 30-second seismic segment corresponds to one ground displacement measurement.
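The windowing described above amounts to a reshape of the continuous trace. The sketch below is illustrative only; the function name and the choice to drop an incomplete trailing window are assumptions.

```python
import numpy as np

def window_trace(trace, fs=100, window_s=30):
    """Split a continuous trace into non-overlapping windows.

    trace: array of shape [n_samples] or [n_samples, n_channels].
    Returns an array of shape [n_windows, fs * window_s, ...].
    """
    trace = np.asarray(trace)
    n = int(fs * window_s)            # 3000 points for 30 s at 100 Hz
    n_windows = trace.shape[0] // n   # ASSUMPTION: drop the incomplete tail
    return trace[: n_windows * n].reshape(n_windows, n, *trace.shape[1:])
```

Each row of the result can then be paired with the 30 s GNSS displacement sample covering the same time interval.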

Wav2Vec-2.0 self-supervised pre-training model

The modified Wav2Vec-2.0 deep learning model extends our previous work, which used a convolutional encoder-decoder model on laboratory data to predict near-future fault friction, to the more challenging task of predicting surface displacement on a seismic fault. For this task, we adopt a self-supervised learning technique developed for automatic speech recognition in an updated workflow. Specifically, we implement the Wav2Vec-2.0 model as briefly described in the introduction section, and in detail as follows.

The application here exploits the power of Wav2Vec-2.0, which uses self-supervised learning of latent vector representations from raw audio waveforms. The model outperforms supervised and semi-supervised methods on a speech recognition task using limited amounts of labeled data. Our goal is to determine whether the model can extract latent vector representations from the raw waveforms of continuous seismic emissions from the collapse event sequence at Kīlauea, and whether these extracted latent vectors can predict ground displacement in the near future. To accomplish the latter task, we attach our transformer decoder model in series after the Wav2Vec-2.0 model and fine-tune the entire model with limited amounts of displacement data. We use HuggingFace's Transformers library (ref. 39) and adopt all model configurations and training hyperparameters from the speech pre-training example in that library. The Wav2Vec-2.0 model includes a feature encoder with 7 temporal convolutional layers. We implement the 12-layer transformer BASE configuration described in Ref. 32. The self-supervised learning task is to mask approximately 49% of the time steps in each window of the input waveform, and the model learns to recover the masked part from the visible part. The waveform is encoded into a series of vectors drawn from a codebook that is trained simultaneously. Instead of directly reconstructing the waveform data, the model attempts to select the correct latent vector for each masked step from among 100 candidate vectors. The total number of trainable parameters in the model is 95.05 million. The loss consists of the contrastive loss associated with the self-supervised learning task and the diversity loss on the learnable codebook.
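As a rough illustration of how span masking can reach a masked fraction near 49%, the sketch below samples span starts and masks a fixed number of following steps. The values `start_prob=0.065` and `span=10` are the defaults reported for the original speech model and are assumed here; the text above states only the resulting fraction, and the function name is hypothetical.

```python
import numpy as np

def sample_span_mask(num_steps, start_prob=0.065, span=10, rng=None):
    """Return a boolean mask over latent time steps (True = masked).

    ASSUMPTION: start_prob and span follow the original Wav2Vec-2.0 setup;
    spans may overlap, so the masked fraction is below start_prob * span.
    """
    rng = np.random.default_rng() if rng is None else rng
    num_starts = int(round(start_prob * num_steps))
    starts = rng.choice(num_steps, size=num_starts, replace=False)
    mask = np.zeros(num_steps, dtype=bool)
    for s in starts:
        mask[s : s + span] = True  # slices past the end are clipped
    return mask
```

For long sequences the expected masked fraction is about 1 - (1 - 0.065)^10, which is close to 0.49.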

We collect all training waveforms (about 16 days of data) from the four seismic stations (RIMD, UWE, BYL, AHUD) as our pre-training dataset, and we collect all test waveforms (about 16 days of data) from the same four stations for validation. Each sample in a batch consists of 300 seconds recorded at 100 Hz (30,000 data points) with 3 channels (east-north-vertical), for a total of 90,000 data points. Assuming a batch size of 8, the shape of the input batch tensor is [8 (batch size), 30,000 (data points), 3 (channels)]. We pass the waveform of each channel independently to the Wav2Vec-2.0 model by reshaping the input batch to [24 (waveform samples) = 8 (batch size) * 3 (channels), 30,000 (data points)]. Each waveform is individually standardized to zero mean and unit variance. This per-sample standardization performs better than standardization over the whole dataset. The learning rate is linearly increased over 32,000 training steps up to 0.001 and then linearly decayed. The AdamW optimizer (ref. 40) is applied. The model was trained using 8 Nvidia Tesla V100 GPUs on a single node. The batch size per GPU is 8, resulting in an effective batch size of 64 per step. Training performance is evaluated using the masked reconstruction accuracy on the validation waveforms after each training epoch. The model with the lowest validation loss is saved. Early stopping is applied when the validation loss has not improved for at least 10 epochs.
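The reshape and per-waveform standardization described above can be sketched as follows. This is an illustrative NumPy version with hypothetical function names; the transpose before the reshape is an assumption made so that the three channels of one sample stay adjacent in the flattened batch.

```python
import numpy as np

def to_single_channel_batch(batch):
    """Reshape [batch, points, channels] to [batch * channels, points]."""
    b, t, c = batch.shape
    # Move channels next to the batch axis first so each row is one channel.
    return batch.transpose(0, 2, 1).reshape(b * c, t)

def standardize_per_waveform(x, eps=1e-7):
    """Standardize each row to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)  # eps guards against constant waveforms

# Example with the shapes quoted in the text.
batch = np.random.default_rng(0).normal(size=(8, 30_000, 3))
waveforms = standardize_per_waveform(to_single_channel_batch(batch))
print(waveforms.shape)  # (24, 30000)
```

Row `3 * i + j` of the result holds channel `j` of sample `i`, which is the ordering needed later to regroup the channels.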

Fine-tuning the pre-trained model and the Wav2Vec-2.0 predictor

The model outputs are produced using a transformer decoder that we refer to as the Wav2Vec-2.0 predictor. We use the standard fine-tuning procedure of Wav2Vec-2.0, which freezes the feature extractor (the 7 convolutional layers), while the transformer encoder part of the model is fine-tuned together with our final transformer decoder. Specifically, the pre-trained model parameters are fine-tuned using the input waveforms recorded at the RIMD seismic station and the target displacements recorded at the CRIM GNSS station. We apply the same layer structure used in Wav2Vec-2.0.

The transformer decoder (ref. 30) is designed for current and near-future prediction using Wav2Vec-2.0 as the signal encoder. An input window of time length \({t}_{in}^{hist}\) contains 3 channels of the seismic record. For example, with \({t}_{in}^{hist}=300\,s\) and a batch size of 8, the shape of the input tensor is [8 (batch size), 30,000 (data points), 3 (channels)]. The input waveforms are reshaped to [24 (waveform samples), 30,000 (data points)] as in the pre-training step and then processed by the pre-trained Wav2Vec-2.0 model to obtain the latent representation vectors of each waveform individually, with shape [24 (waveform samples), 93 (time steps), 768 (vector dimension)]. These vectors are projected onto an embedding space of dimension 256, giving a tensor of shape [24, 93, 256], which is the key/value input to the transformer decoder. The transformer decoder consists of multi-head attention and feed-forward network layers, applying layer normalization first and using a Gaussian error linear unit (GELU) activation function. We tested 1, 2, 3 and 4 successive layers and found that the number of layers did not affect the results. For future prediction, the sampling rate of the target displacement is 0.0333 Hz (every 30 s), so an output window of time length \({t}_{out}^{future}\) requires predicting \({t}_{out}^{future}/30\,s\) future data points. For example, when using \({t}_{out}^{future}=60\,s\) to predict two future displacements at 30 seconds and 60 seconds respectively, we input a query vector of shape [24, 2, 256] to the transformer decoder. The query vector comes from a learnable positional embedding layer with a maximum length of 10, because the maximum output length is 300 seconds in this work. The output of the decoder therefore has the same shape, [24, 2, 256]. It is reshaped to separate the 3 channels of each input waveform, [8 (batch size), 3 (channels), 2, 256], and then averaged over the channels to shape [8, 2, 256].

A 10% dropout layer and a linear layer project the final vector dimension to the final displacement output of shape [8, 2, 1]. The loss function is the mean square error (MSE). The optimizer parameters and computing settings are identical to those used in pre-training. The number of trainable parameters in the model is 91.42 million. We use the model with the best \(R^{2}\) and stop fine-tuning early if the validation loss does not improve for 10 epochs.
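The tensor shapes quoted above can be checked with a short sketch. The kernel sizes and strides below are those of the 7-layer feature encoder in the original Wav2Vec-2.0 architecture and are assumed to be unchanged here; the function names are hypothetical, and the channel regrouping assumes the three channels of each sample are adjacent rows in the 24-row batch.

```python
import numpy as np

# ASSUMPTION: feature-encoder settings from the original Wav2Vec-2.0 model.
KERNELS = (10, 3, 3, 3, 3, 2, 2)
STRIDES = (5, 2, 2, 2, 2, 2, 2)

def encoder_output_length(n_samples):
    """Number of latent time steps after the unpadded convolution stack."""
    length = n_samples
    for kernel, stride in zip(KERNELS, STRIDES):
        length = (length - kernel) // stride + 1
    return length

def n_queries(t_out_s, step_s=30):
    """Number of future displacement points for an output window."""
    return t_out_s // step_s

def average_channels(decoded, n_channels=3):
    """[batch * channels, n_out, dim] -> [batch, n_out, dim] by channel mean."""
    bc, n_out, dim = decoded.shape
    grouped = decoded.reshape(bc // n_channels, n_channels, n_out, dim)
    return grouped.mean(axis=1)

print(encoder_output_length(30_000))                   # 93
print(average_channels(np.zeros((24, 2, 256))).shape)  # (8, 2, 256)
```

A 300 s window at 100 Hz therefore maps to 93 latent time steps, consistent with the [24, 93, 768] shape given in the text.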

Grid search over input/output time lengths

We systematically test the contemporary and near-future forecasting capabilities of the Wav2Vec-2.0 predictor by applying different input and output window lengths using RIMD-CRIM station data. Window lengths range from 30 to 300 seconds with a step size of 30 seconds. Each Wav2Vec-2.0 predictor starts from the same pre-trained Wav2Vec-2.0 model and is fine-tuned using a pair of input/output window lengths, denoted Xs-Ys. For the grid search, we first fine-tune ten models to predict the future displacement at 30 s using input seismic windows of Xs-30s, X = 30, 60, 90, …, 270, 300, as shown in the first column of Supplementary Figure S1. The Wav2Vec-2.0 predictor provides output window size flexibility by varying the query positional embedding vector in the transformer decoder, e.g., of shape [24, Y/30, 256], while maintaining the same model structure. Thus, for a fixed input length, we start with the fine-tuned weights of the Xs-30s model and use the trained weights of each Xs-Ys model to initialize training of the subsequent Xs-(Y+30)s model. This is repeated for the range of time periods tested.
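The warm-start order of the grid search can be written out as a simple schedule. This sketch only enumerates which model initializes which; the function name and the `"pretrained"` label are hypothetical, and no training is performed.

```python
def grid_search_schedule(lengths=range(30, 301, 30)):
    """List (model_name, initialized_from) pairs for the Xs-Ys grid.

    For each input length X, the Xs-30s model starts from the pre-trained
    weights, and each Xs-(Y+30)s model starts from the trained Xs-Ys model.
    """
    schedule = []
    for x in lengths:
        previous = "pretrained"
        for y in lengths:
            name = f"{x}s-{y}s"
            schedule.append((name, previous))
            previous = name
    return schedule

schedule = grid_search_schedule()
print(len(schedule))   # 100
print(schedule[:2])    # [('30s-30s', 'pretrained'), ('30s-60s', '30s-30s')]
```

With ten input and ten output lengths, the grid contains 100 fine-tuned models, ten of which start directly from the pre-trained weights.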

Source: https://www.nature.com/articles/s41467-025-55994-9
