Predicting early Alzheimer’s with blood biomarkers and clinical features

Alzheimer’s Disease Neuroimaging Initiative (ADNI)

Data used in this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.usc.edu). ADNI was launched in 2003 as a public-private partnership, led by Principal Investigator Michael W. Weiner, MD, with the main goal of testing whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment could track the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). For up-to-date information, please see http://www.adni-info.org. In addition to MRI and PET neuroimaging of patients at regular intervals, ADNI has collected and analyzed whole blood samples for genotyping and gene expression analysis. Table 4 summarizes the genotyping data provided by ADNI. Blood gene expression profiling was conducted using the Affymetrix Human Genome U219 Array for 744 patients in the ADNI2/ADNI-GO phase [24]. This study used all participants for whom both genetic and gene expression data were available in ADNI (623 participants). The selected cohort comprises 212 participants with a baseline diagnosis of cognitively normal (CN) and 411 participants with a diagnosis of mild cognitive impairment (MCI) or Alzheimer’s disease (AD), grouped together.

The ADNI clinical data included patient demographics, brain functioning scores, neuropsychological test scores, and MRI volume measurements. Demographic information included age, gender, ethnicity, education, and marital status. The APOE4 variable indicates the presence of the APOE-\(\epsilon 4\) allele, a known AD risk factor. PET measures of brain function include fluorodeoxyglucose (FDG), Pittsburgh compound B (PIB), and Florbetapir (AV45). Amyloid-\(\beta \), tau, and p-tau levels in cerebrospinal fluid (CSF) are indicated by the ABETA, TAU, and PTAU variables. The clinical dementia rating sum of boxes (CDRSB) variable provides the sum of all cognition and function scores from the Clinical Dementia Rating test. ADAS and MOCA are neuropsychological test variables used to assess cognitive capacity. The Mini-Mental State Exam (MMSE) variable reflects disease progression and cognitive changes over time. The Rey’s Auditory Verbal Learning Test (RAVLT) variable comes from a neuropsychological test that examines episodic memory. Logical Memory-Delayed Recall Total Number of Story Units Recalled (LDELTOTAL) is another neuropsychological test variable; it assesses an individual’s ability to remember information after some time. The TRABSCOR variable denotes the time required to complete neuropsychological tests. The Functional Activities Questionnaire (FAQ) assesses an individual’s reliance on others to perform daily life activities. Everyday cognitive evaluations (Ecog) are questionnaires used to assess the patient’s ability to perform daily tasks. Hippocampus, intracranial volume (ICV), Mid Temporal, Fusiform, Ventricles, Entorhinal, and Whole Brain are structural MRI variables. The Modified Preclinical Alzheimer Cognitive Composite (mPACC) variable assesses cognition, episodic memory, and time needed to complete tasks.

Data preprocessing

Among the participants, 623 unique individuals provided whole blood samples for gene expression assays at specific time points. Consequently, we selected these 623 patients and extracted their clinical data from the time of whole blood sample collection. Upon evaluation, most clinical variables had no missing values or were missing for only a small number of individuals. The PIB and DIGISCOR variables were missing for over 90% of individuals and were therefore removed. Around 35% of the selected participants do not have CSF biomarker variables (ABETA, TAU, PTAU). MRI variables are missing in 17% of the individuals, and the FDG and AV45 variables are missing in 16%. The remaining missing values were imputed with Multivariate Imputation by Chained Equations (MICE) using the scikit-learn package in Python [60]. The imputation model was fitted on the training data and then applied to the test data. Supplementary Fig. S1 shows all variables and the number of missing values in each variable.
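The fit-on-training, apply-to-test imputation pattern can be sketched as follows. This is a minimal illustration on synthetic data, assuming scikit-learn's `IterativeImputer` as the MICE-style imputer; the array shapes, missingness rate, and `max_iter` value are placeholders and are not taken from the study.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (enables IterativeImputer)
from sklearn.impute import IterativeImputer

rng = np.random.default_rng(0)

# Synthetic stand-in for clinical features: column 1 is correlated with column 0.
base = rng.normal(size=(120, 1))
X = np.hstack([base, 2 * base + rng.normal(scale=0.1, size=(120, 1)), rng.normal(size=(120, 1))])

# Remove roughly 20% of the values in column 1 to mimic missing measurements.
X_missing = X.copy()
X_missing[rng.random(120) < 0.2, 1] = np.nan

X_train, X_test = X_missing[:90], X_missing[90:]

# Fit the chained-equation imputer on the training rows only ...
imputer = IterativeImputer(max_iter=10, random_state=0)
X_train_imp = imputer.fit_transform(X_train)
# ... then reuse the fitted imputer on the test rows, so no test information leaks into it.
X_test_imp = imputer.transform(X_test)

print(np.isnan(X_train_imp).sum(), np.isnan(X_test_imp).sum())
```

Fitting on the training split alone keeps the test split untouched until evaluation, which is the point of the two-stage procedure described above.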

For performance purposes, the genotyping data used in this study comprise the top 121 markers reported in a previous 2023 study [61]. The exact feature list can be found in Supplementary Fig. S2. As reported there, the original PLINK file format of the dataset consists of three files: “bim”, “bed”, and “fam”. Subject characteristics are documented in the “fam” file. The location, name, and allele representation of SNPs (features) are kept in the “bim” file. Lastly, the “bed” file stores the genotype calls in a binary encoding that is not human-readable. Its 8-bit codes link the subjects in the “fam” file to the SNPs in the “bim” file and represent the genotype codes [61].
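To make the binary “bed” encoding more concrete, here is a small sketch of how one byte unpacks into genotype calls. It assumes the standard PLINK 1 variant-major layout (three magic bytes, then four 2-bit genotype codes per byte, lowest bits first); the function name `decode_bed` and the toy bytes are illustrative and do not come from the study.

```python
# Meaning of each 2-bit code in a PLINK 1 .bed file.
CODES = {0b00: "hom_A1", 0b01: "missing", 0b10: "het", 0b11: "hom_A2"}
MAGIC = bytes([0x6C, 0x1B, 0x01])  # third byte 0x01 marks the variant-major layout


def decode_bed(data: bytes, n_samples: int, n_variants: int):
    """Decode raw .bed bytes into one list of genotype labels per variant."""
    if data[:3] != MAGIC:
        raise ValueError("not a variant-major PLINK 1 .bed file")
    bytes_per_variant = (n_samples + 3) // 4  # each byte holds up to four samples
    genotypes = []
    for v in range(n_variants):
        start = 3 + v * bytes_per_variant
        block = data[start:start + bytes_per_variant]
        calls = []
        for s in range(n_samples):
            # Sample s sits in byte s // 4, at bit offset 2 * (s % 4).
            code = (block[s // 4] >> (2 * (s % 4))) & 0b11
            calls.append(CODES[code])
        genotypes.append(calls)
    return genotypes


# Toy file: one variant, five samples (the fifth sample spills into a second byte).
toy = MAGIC + bytes([0b01111000, 0b00000011])
result = decode_bed(toy, n_samples=5, n_variants=1)
print(result)
```

In practice the number of samples and variants would be read from the “fam” and “bim” files, which is why the three files are always used together.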

Machine learning pipeline

The machine learning model development workflow is depicted in Fig. 5. The scikit-learn package in Python programming language v3.9.12 was used to develop the models. Further details of the workflow are discussed in the following sections.

Preparation of input data

Different combinations of clinical, gene expression, and SNP data were integrated and used to train the models, as shown in Fig. 5. Training involved a two-step (nested) cross-validation approach: a stratified five-fold cross-validation in the outer loop and an additional stratified three-fold cross-validation in the inner loop. Target stratification was used in both loops to maintain the proportions of MCI/AD and CN participants. Within the inner loop, MICE imputation and then Min-Max scaling were fitted on the training data and subsequently used to transform the test data. Our dataset contains roughly twice as many MCI/AD as CN participants (411 versus 212) and is therefore imbalanced. This was addressed using the Synthetic Minority Oversampling Technique (SMOTE).
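The two-level stratified splitting can be illustrated with a short standard-library sketch. The helper `stratified_folds` is a hypothetical stand-in for a library splitter, and the seeds are arbitrary; only the class counts (411 MCI/AD, 212 CN) and the fold numbers (five outer, three inner) come from the text.

```python
import random
from collections import Counter, defaultdict


def stratified_folds(labels, n_folds, seed=0):
    """Assign a fold index to every sample so each fold keeps similar class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    fold_of = [0] * len(labels)
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, idx in enumerate(indices):
            fold_of[idx] = position % n_folds  # deal samples of one class round-robin
    return fold_of


labels = ["MCI/AD"] * 411 + ["CN"] * 212
outer_fold = stratified_folds(labels, n_folds=5)

outer_test_counts = []
inner_val_counts = []
for k in range(5):
    train_idx = [i for i, f in enumerate(outer_fold) if f != k]
    test_idx = [i for i, f in enumerate(outer_fold) if f == k]
    outer_test_counts.append(Counter(labels[i] for i in test_idx))

    # Inner loop: split only the outer training part, again stratified by class.
    train_labels = [labels[i] for i in train_idx]
    inner_fold = stratified_folds(train_labels, n_folds=3, seed=k + 1)
    for j in range(3):
        inner_val_counts.append(
            Counter(lab for lab, f in zip(train_labels, inner_fold) if f == j)
        )
        # Imputation, scaling, and oversampling would be fitted on the inner
        # training part here, never on the held-out part.

print(outer_test_counts[0])
```

Every outer test fold ends up with 82 or 83 MCI/AD and 42 or 43 CN participants, so the roughly 2:1 class ratio is preserved at both levels.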

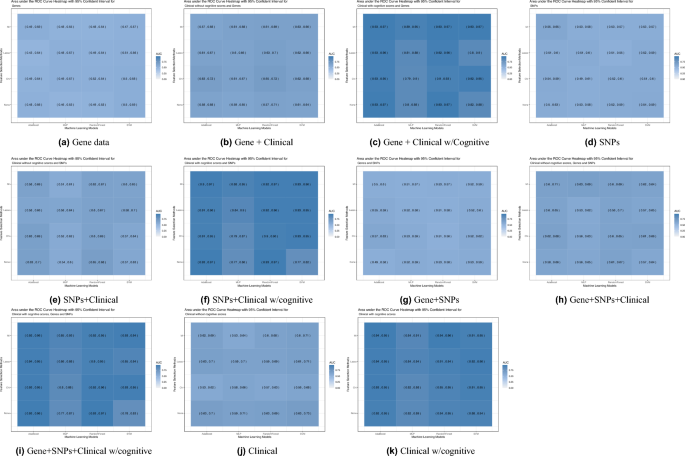

Four types of input data (SNPs, gene expression, clinical data, and clinical data without cognitive scores) were used separately and in combination to create single-modal and multi-modal inputs for the machine learning models. Multi-modal inputs include paired combinations of the four inputs, as well as the combination of all four together. The features in multi-modal inputs were merged before feature selection.

Feature selection

In this study, three feature selection methods were applied to both single-modal and multi-modal inputs: Chi-square, mutual information (MI), and Least Absolute Shrinkage and Selection Operator (LASSO). Additionally, a parallel analysis was conducted in which no feature selection was applied. Before the selection process, a total of 45 clinical features, 2,702,858 SNPs, and 19,403 gene expression features were available. From the SNPs and gene expression features, the top 121 features were chosen based on a previously published study [61], enhancing the robustness of our genetic data. The number of features selected by each method varies because it is an optimized hyperparameter. The features selected by each feature selection method are provided in Supplementary Data 1.
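A rough sketch of the three selection strategies on synthetic data is shown below, assuming scikit-learn's `SelectKBest` and `SelectFromModel` as the selection wrappers. The value of `k`, the `alpha` of the LASSO model, and the dataset itself are placeholders, and the scaler is fitted on the whole toy dataset only for brevity.

```python
from functools import partial

from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectFromModel, SelectKBest, chi2, mutual_info_classif
from sklearn.linear_model import Lasso
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in data: 200 samples, 20 features, binary target.
X, y = make_classification(n_samples=200, n_features=20, n_informative=5, random_state=0)

# The chi-square score requires non-negative inputs, so scale features to [0, 1] first.
X_scaled = MinMaxScaler().fit_transform(X)

k = 5  # hypothetical value; in the study the number of features is a tuned hyperparameter
selectors = {
    "chi2": SelectKBest(chi2, k=k),
    "mutual_info": SelectKBest(partial(mutual_info_classif, random_state=0), k=k),
    # LASSO keeps the features whose coefficients are not shrunk to zero.
    "lasso": SelectFromModel(Lasso(alpha=0.01)),
}

selected = {
    name: selector.fit(X_scaled, y).get_support(indices=True)
    for name, selector in selectors.items()
}
for name, idx in selected.items():
    print(name, idx.tolist())
```

The two filter methods return exactly `k` features, whereas the number kept by LASSO depends on the regularization strength, which is one reason the final feature count differs between methods.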

Classification models and hyperparameter optimization

To classify the subjects as either CN or MCI/AD, Support Vector Machine (SVM), Random Forest (RF), AdaBoost (AB), and Multi-Layer Perceptron (MLP) classifiers were implemented. Bayesian optimization with five-fold cross-validation and repeated stratified three-fold cross-validation was used to fine-tune the hyperparameters, with 100 trials for each fold, to maximize the AUC value on the validation set. We simultaneously optimized the hyperparameters of the feature selection step and of the model. For the SVM model, the cost (C), gamma (\(\gamma \)), kernel, and class weight hyperparameters were optimized, with the cost ranging between 0.1 and 1000 and gamma between 0.0001 and 0.001. The kernel was chosen among linear, polynomial, radial basis function (RBF), and sigmoid, and the class weight was set to either balanced or none.

For the RF model, the maximum tree depth, maximum number of features, split quality criterion, and class weight were optimized. The candidate maximum tree depths were 3, 5, 7, and none. The candidate maximum feature numbers were the square root and log (base 2) of the number of features, as well as the total number of features. The candidate criteria were Gini impurity, logistic loss, and entropy. Lastly, the class weight was set to balanced, balanced subsample, or none.

For AB, the number of estimators, learning rate, and algorithm were optimized. The number of estimators was set at 50, 100, and 200. The learning rate was set at 0.1, 0.01, and 0.001. For the algorithm, we utilized Stagewise Additive Modeling using a Multi-class Exponential loss function (SAMME) and SAMME.R (which outputs class probabilities instead of discrete values 0 or 1).

For the MLP classifier, the hidden layer sizes, activation function, weight optimizer, L2 regularization strength, and learning rate were optimized. The hidden layer size ranged from 10 to 100 in increments of 10. The weight optimization algorithm choices included adaptive moment estimation (Adam), stochastic gradient descent (SGD), and Limited-memory Broyden-Fletcher-Goldfarb-Shanno (LBFGS). The learning rate was either set as a constant or specified according to adaptive or inverse scaling methods. The L2 strength values were set as 0.01, 0.001, or 0.0001.
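The search spaces described above can be written down compactly, as in the sketch below. The parameter names follow scikit-learn conventions; the log-uniform sampling of C and gamma is an assumption; and plain random sampling is used here as a simple stand-in, not the Bayesian optimizer used in the study. The MLP activation candidates are omitted because the text does not list them.

```python
import math
import random

SEARCH_SPACE = {
    "SVM": {
        "C": ("log_uniform", 0.1, 1000.0),
        "gamma": ("log_uniform", 1e-4, 1e-3),
        "kernel": ("choice", ["linear", "poly", "rbf", "sigmoid"]),
        "class_weight": ("choice", ["balanced", None]),
    },
    "RF": {
        "max_depth": ("choice", [3, 5, 7, None]),
        "max_features": ("choice", ["sqrt", "log2", None]),  # None = all features
        "criterion": ("choice", ["gini", "log_loss", "entropy"]),
        "class_weight": ("choice", ["balanced", "balanced_subsample", None]),
    },
    "AB": {
        "n_estimators": ("choice", [50, 100, 200]),
        "learning_rate": ("choice", [0.1, 0.01, 0.001]),
        "algorithm": ("choice", ["SAMME", "SAMME.R"]),
    },
    "MLP": {
        "hidden_layer_size": ("choice", list(range(10, 101, 10))),
        "solver": ("choice", ["adam", "sgd", "lbfgs"]),
        "learning_rate": ("choice", ["constant", "adaptive", "invscaling"]),
        "alpha": ("choice", [0.01, 0.001, 0.0001]),  # L2 regularization strength
    },
}


def sample_params(space, rng):
    """Draw one hyperparameter configuration from a search space."""
    params = {}
    for name, spec in space.items():
        if spec[0] == "choice":
            params[name] = rng.choice(spec[1])
        else:  # log-uniform between spec[1] and spec[2]
            params[name] = math.exp(rng.uniform(math.log(spec[1]), math.log(spec[2])))
    return params


rng = random.Random(0)
svm_trials = [sample_params(SEARCH_SPACE["SVM"], rng) for _ in range(100)]
print(svm_trials[0])
```

A Bayesian optimizer would replace `sample_params` with a proposal step that uses the validation AUC of earlier trials to choose the next configuration.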

The selected hyperparameters for each dataset and the extracted features are shown in Supplementary Data 2.

Model performance metrics

Model performance is evaluated using the area under the receiver operating characteristic curve (ROC-AUC) and accuracy. The formula for accuracy is shown below:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Here, True Positive (TP) is the number of correctly predicted MCI/AD cases, and False Positive (FP) is the number of CN participants incorrectly predicted as MCI/AD. True Negative (TN) is the number of correctly predicted CN participants, and False Negative (FN) is the number of MCI/AD participants wrongly predicted as CN.
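Both metrics can be computed by hand on a toy example, as sketched below. The labels, scores, and the 0.5 decision threshold are made up for illustration (1 = MCI/AD, 0 = CN), and the AUC is computed through its pairwise-ranking interpretation, which is one of several equivalent ways to obtain it.

```python
def accuracy(tp, tn, fp, fn):
    """Accuracy as defined in Eq. (1)."""
    return (tp + tn) / (tp + tn + fp + fn)


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive scores above a random negative.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))

acc = accuracy(tp, tn, fp, fn)
auc = roc_auc(y_true, scores)
print(acc, auc)
```

Accuracy depends on the chosen threshold, while the AUC summarizes ranking quality across all thresholds, which is why the two are reported together.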

SHAP model interpretation

Lundberg and Lee [35] proposed the SHapley Additive exPlanations (SHAP) technique to explain model predictions, which computes a unified measure of feature importance using game theory. To calculate SHAP values, each feature’s contribution to the predicted value is estimated by comparing predictions over different combinations of features. The SHAP value for a feature is the weighted average of its marginal contributions to the prediction over all possible feature combinations. SHAP values indicate the magnitude of the difference that each feature makes to the final predicted value, starting from a base expected value. SHAP values were computed for the best-performing model to identify the features that have the highest impact on model performance and are therefore potential biomarkers for prodromal and advanced Alzheimer’s. Genes were extracted from the most influential SHAP features, and a comprehensive review of the existing literature was conducted to establish connections between our findings and existing evidence.
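The averaging of marginal contributions can be made concrete with an exact Shapley computation on a tiny model. This is a simplified sketch: the toy model, the instance, and the all-zero baseline that stands in for "absent" features are assumptions, and practical SHAP implementations use approximations and background data instead of enumerating every subset.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values by enumerating every subset of the other features."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Shapley weight of a coalition of this size.
            weight = factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


def model(x):
    # Toy model with one interaction term between features 0 and 2.
    return 2 * x[0] + 3 * x[1] + x[0] * x[2]


instance = (1.0, 2.0, 3.0)
baseline = (0.0, 0.0, 0.0)


def value_fn(present):
    """Model output when only the features in `present` take the instance's values."""
    x = [instance[j] if j in present else baseline[j] for j in range(3)]
    return model(x)


phi = shapley_values(value_fn, n_features=3)
base_value = value_fn(frozenset())
print(phi, base_value + sum(phi), model(instance))
```

The contributions add up to the difference between the prediction and the base value, and the interaction term is split evenly between the two features involved.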

Stratified case studies with SHAP

To examine the insights provided by SHAP, the cohort was stratified by age into 10-year intervals from 50 to 90 years old. This stratified examination also provides nuanced insights into whether Age assumes a more prominent role in discriminating between the two classes within specific age brackets, potentially signifying a stronger association with AD development or progression in late adulthood. A feature importance plot was generated for each selected age group, allowing for a comprehensive understanding of the impact of each feature on the final prediction outcome.

Statistical analysis

Differences in clinical features between CN and MCI/AD participants were analyzed using statistical tests for significance. The t-test was used for parametric continuous variables (with equal variance assumption), while the Mann-Whitney U test was used for non-parametric continuous variables. The Chi-square \((\chi ^2)\) test (with continuity correction) was used for categorical variables, while Fisher’s exact test was used when cell counts were small. All statistical tests were performed at the 5% significance level (95% confidence level).
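As an illustration of the continuity-corrected Chi-square test, the sketch below computes the statistic and p-value for a 2x2 table using only the standard library. The counts are invented for the example and do not come from ADNI; the p-value uses the closed form for one degree of freedom.

```python
import math


def chi_square_2x2(a, b, c, d, yates=True):
    """Chi-square test for the 2x2 table [[a, b], [c, d]] with optional Yates correction."""
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        diff = max(0.0, diff - n / 2)  # continuity correction
    statistic = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows = CN / MCI-AD, columns = category absent / present.
stat_corrected, p_corrected = chi_square_2x2(30, 20, 10, 40)
stat_plain, p_plain = chi_square_2x2(30, 20, 10, 40, yates=False)

alpha = 0.05  # 5% significance level
print(round(stat_corrected, 3), p_corrected < alpha)
```

The correction always shrinks the statistic slightly, which makes the test more conservative for small tables; with very small cell counts, Fisher’s exact test is the safer choice.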

Source: https://www.nature.com/articles/s41598-024-56489-1
